Tripling an Engineering Team in Six Months - Part Two: Planning to Scale

In my current role as Delivery Manager at a software company, I oversee the design and development teams (aka 'Engineering') that are building a brand-new property management software-as-a-service platform. In 2016 we were acquired by a private investment consortium and given an injection of funding in order to 'go faster'. This blog series is a summary of the lessons I learned from tripling an engineering team in six months.

Planning to Scale

If you haven't done so already, please read Part One: The Background Story, which gives an overview of what happened in 2016 that led us to need to scale at such a pace.

In this article, Part Two: Planning to Scale, I will dive deeper into how we actually planned the massive task we were embarking upon, the questions we needed to ask, and the important lessons we learned along the way.

I'll do this by going back through the planning process in chronological order.

Step One: Define the current state

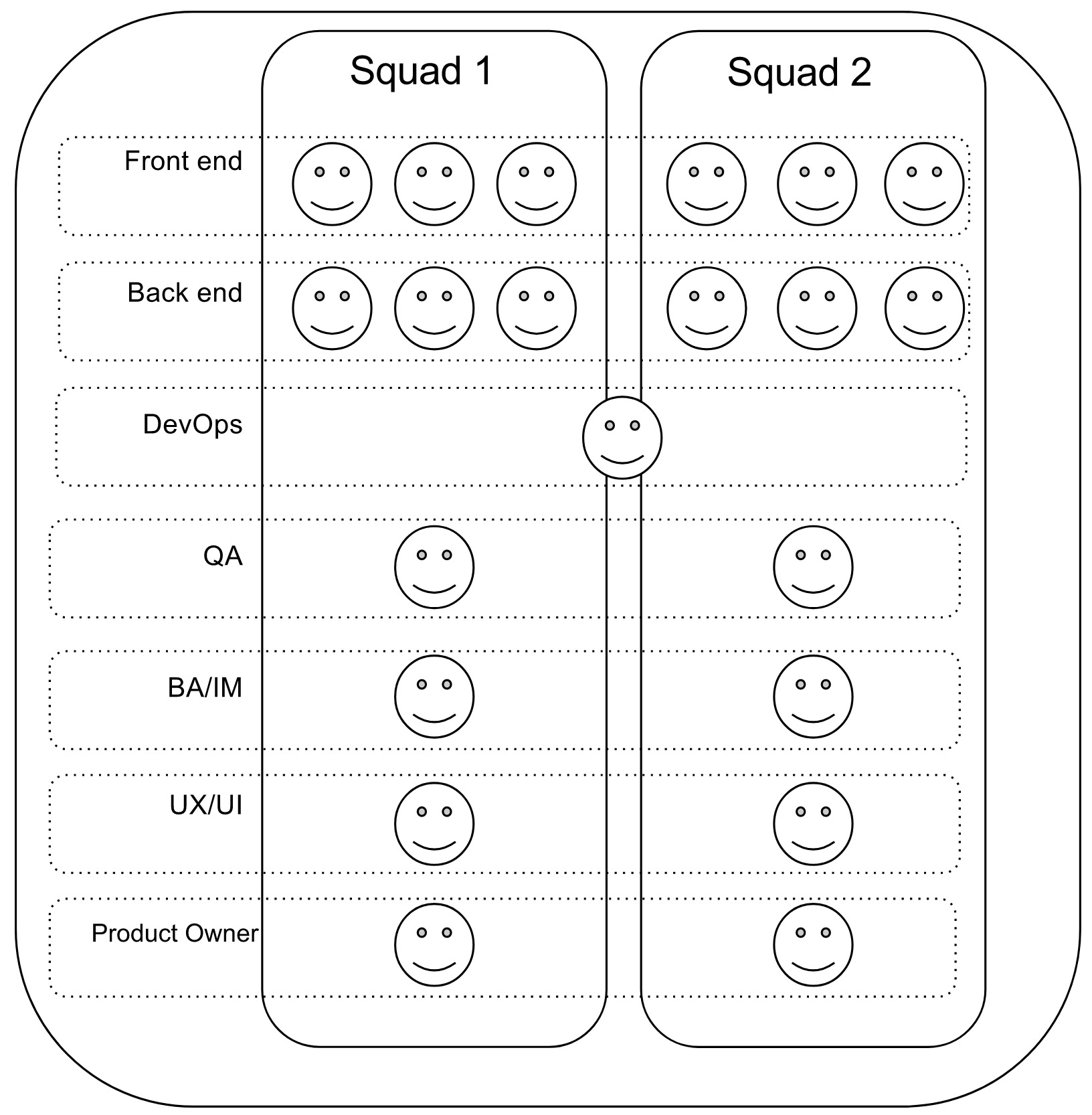

As shown in Part One, the first step for planning to scale was to draw the current state of the world. Ours looked approximately like this:

Our teams were formed roughly into two parallel squads, one for each of the key subject matter domains we were tackling.

The squads were fluid. They would form around chunks of work (which we called increments), take each one through to completion, and then regroup as a single team, switching roles and domains as needed. This gave the team the opportunity to be flexible, promoted upskilling and knowledge sharing, and empowered everyone to own the solutions they were building.

That's not to say everything we did was perfect. We had some small problems, or areas for improvement, but we were mostly on top of them and as a result were making great progress. But when you scale an existing team you also scale any existing problems, so one of the key tasks we completed before scaling was to assess our current issues.

Assess current issues

"when you scale an existing team you also scale any existing problems"

Issues, problems, inefficiencies, whatever you want to call them: all teams have some, because no team is ever perfect, but they often become accepted as business as usual. Some people refer to this as the parable of the boiling frog.

In a small team the issues are generally well known and accepted, or at least judged to be low priority. Everyone knows how to work around them and can get on with their higher-priority work.

But when you scale a team, those little problems become magnified. New people join faster than you can keep up with, and the stories about why things are or aren't done a certain way get lost. Issues with workarounds become pain points, problems become more difficult, and inefficiencies are exaggerated.

So before scaling the team it was extremely important we took stock of our current issues and tried to predict where new issues might arise.

Some of our examples:

- Increments were getting bigger and dragging out

- Varying definitions of scope for Beta

- Developers moved to new work before testing finished

There were plenty more than the list above, but these give you a basic idea of what I am talking about. None of these were show-stoppers in our small team, but we needed to call them out before we scaled so that we could factor them into the scaling plan.

This is the key point. Before you scale you need to know everything, the good and the bad, about your current situation. Otherwise you'll be caught off guard when what used to be a small, simple problem suddenly explodes into a big, painful one.

After defining where we were, by drawing up the structure and calling out the current deficiencies, we were ready to tackle the next step.

Step Two: Guess the future

Take a guess, a wild guess, at what the future will look like. How many people will you hire? What will the teams look like? Do you need more leadership to help guide and mentor newbies? What other roles might be needed? And so on.

Take heart in the knowledge that all of this will be a wild guess. When you look back in six months' time you will probably laugh at your naivety. Plans have a habit of changing, and when you are trying to scale a team fast you find that they change extremely fast.

I won't go into every iteration of our future plan. Instead, here is the one we finally landed on:

Note: This was the 'final' plan; where we actually ended up was quite different.

We had already implemented our own customised Spotify Engineering model in the first phase of the project so it made sense to extend it. We added clear practice lead roles, new delivery management, new product roles, and in general adopted the tribe methodology that we hadn't needed as a small team.

We believed this plan would let us hit the required team size while also building a framework for future growth. Each tribe was designed to operate independently by including enough leadership and technical capability across all of its teams. That way, if we needed to, we could simply add another tribe (with its own manager, practice leads, product team, designers, engineers, analysts, testers, etc.) and it would be mostly self-sufficient.
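To make the 'add another tribe' idea concrete, here is a minimal Python sketch that treats a tribe as a reusable template of role headcounts and works out the hiring gap for a given number of tribes. The role names and every headcount below are hypothetical placeholders, not our actual plan, and the names `TRIBE_TEMPLATE`, `headcount_for` and `additional_hires` are purely illustrative.

```python
from collections import Counter

# Hypothetical tribe template (role -> headcount). The roles echo the list in
# the text; the numbers are illustrative assumptions, not our real plan.
TRIBE_TEMPLATE = Counter({
    "tribe manager": 1,
    "practice lead": 2,
    "product owner": 2,
    "designer": 2,
    "engineer": 12,
    "analyst": 2,
    "tester": 3,
})


def headcount_for(tribes, template=TRIBE_TEMPLATE):
    """Target headcount per role when every tribe is a copy of the template."""
    return Counter({role: count * tribes for role, count in template.items()})


def additional_hires(current, tribes):
    """Roles still to fill: target headcount minus the people already in post."""
    gap = headcount_for(tribes)
    gap.subtract(current)
    # Unary + keeps only positive counts, dropping roles that are fully staffed.
    return +gap


# Hypothetical current team moving to a two-tribe structure.
current_team = Counter({"engineer": 8, "designer": 1, "tester": 2, "analyst": 1})
gap = additional_hires(current_team, tribes=2)
print(sum(gap.values()), "hires needed:", dict(gap))
```

With these made-up numbers, a current team of 12 moving to two tribes leaves 36 roles to fill. Keeping the template as data makes it cheap to re-run the sums every time the plan changes.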

And now that we had a rosy view of the future, we had to battle-harden it.

Step Three: Test the plan

This step is about poking and prodding the plan, ripping it apart, and questioning every assumption made. Plans and team diagrams look great on paper, but how will they actually work with real people?

Here are the big things we challenged, roughly grouped by topic:

Technical Feasibility

- Can six squads work in parallel without getting in each other's way?

- Does our existing architecture support this scale?

- How would we maintain code quality?

- Does our coding process/practice need to change?

- How will we achieve CD with so many people checking code in?

Process

- Will current development processes scale?

- How will we manage three times the volume of code changes (pull requests, etc.)?

- How do we bring new people on and get them up to speed quickly?

Product

- Do we have enough work ready to support this size?

- Do we know what post-beta customers want?

- How will we keep on top of the product changes?

Each of these deserves its own post to answer adequately, so I won't delve into them here. They serve as examples of the type of critical thinking that needs to happen before you commit to audacious scale-up plans.

Once the plan has been robustly tested against its internal aspects, it is time to focus on the external ones. Namely, can you actually hire the people you need? I start this by doing the funnel sums.

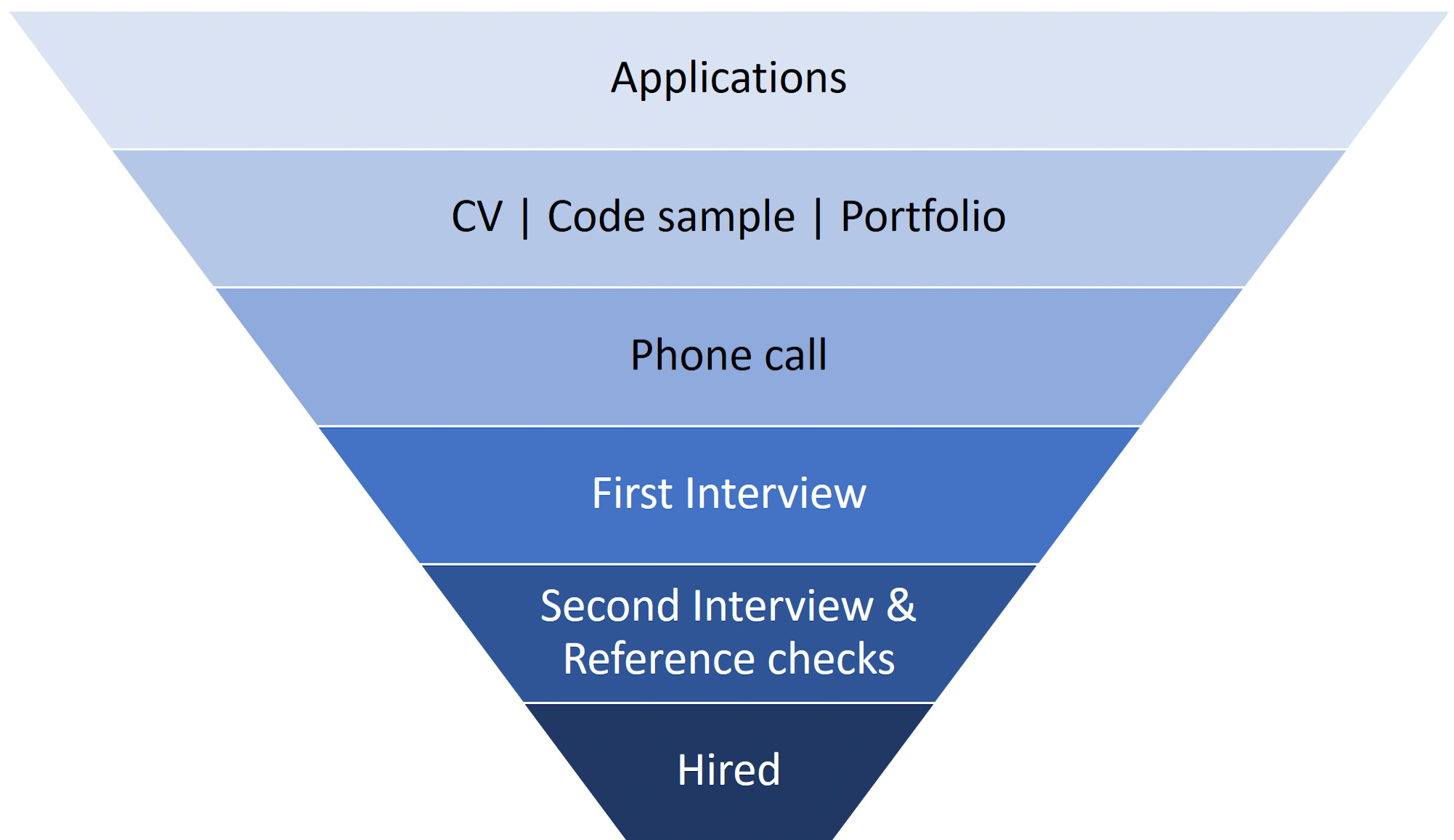

Funnel Sums

Funnel sums means working through each step of the recruitment funnel to identify your current or recent numbers at each stage, and then extrapolating those numbers to the scale-up plan. This test is designed to answer the question: could you actually hire that many people in your location?

I like to do this working backwards: assuming one successful hire, how many candidates do I need to see at each step of the recruitment funnel to make that single hire? In some cases I might realise I need to see 30-40 applications for every hire; in others it could be 50, or even 100+. Knowing that number is extremely important, and it is great information to guide all of your recruitment campaign decisions.

I recommend doing this analysis per role type, as the applicant pool, and thus the applicant filter rate, varies greatly across skill sets and domains. This will also give insight into how to prioritise roles.
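The funnel sums lend themselves to a few lines of code. Below is a minimal Python sketch of the backwards calculation, run per role type and extrapolated to a scale-up plan. The stage names, pass-through percentages and planned hire counts are hypothetical examples (substitute your own recent recruitment data), and `candidates_needed` is an illustrative helper, not a standard tool. It uses exact fractions so that rounding up to whole candidates happens once per stage instead of compounding.

```python
import math
from fractions import Fraction

# Hypothetical funnels per role type: (stage, % of candidates at this stage who
# make it to the next one). Replace these with your own recent numbers.
FUNNELS = {
    "engineer": [("application", 20), ("phone screen", 50), ("interview", 40), ("offer", 80)],
    "tester": [("application", 35), ("phone screen", 60), ("interview", 50), ("offer", 90)],
}


def candidates_needed(funnel, hires=1):
    """Work backwards from `hires` successful hires to the number of candidates
    who must enter each stage of the funnel."""
    needed = {}
    required = Fraction(hires)
    for stage, pass_pct in reversed(funnel):
        required = required * 100 / pass_pct  # exact arithmetic, no float drift
        needed[stage] = math.ceil(required)   # round up to whole people
    # Return the stages in funnel order (application first).
    return {stage: needed[stage] for stage, _ in funnel}


# Extrapolate to a (hypothetical) scale-up plan.
PLANNED_HIRES = {"engineer": 16, "tester": 4}
for role, hires in PLANNED_HIRES.items():
    print(role, candidates_needed(FUNNELS[role], hires))
```

With these made-up rates a single engineering hire needs 32 applications, while the 16 planned engineering hires need 500. Comparing a figure like that with the number of applications you have actually been receiving is a quick test of whether the plan is realistic in your location.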

Ordering objectives

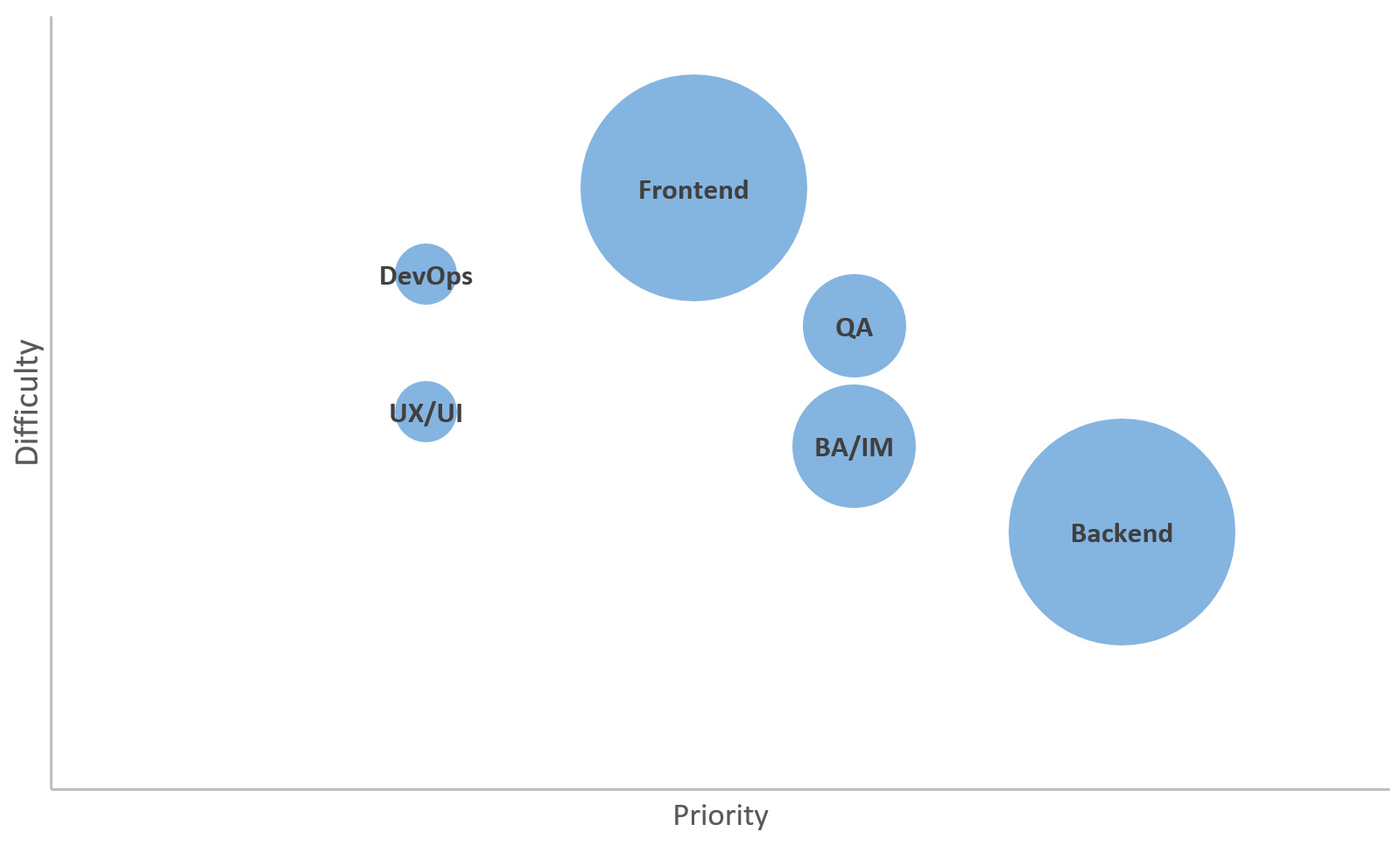

This step is about defining the order in which you need to recruit people to join your team. There are three dimensions that serve as key factors here:

- Priority - how important it is to have this role immediately.

- Difficulty - the level of difficulty, or how long the lead time is, for recruiting the role.

- Volume - the number of people needed per role type.

Ordering objectives across three dimensions can be confusing and difficult to understand. To help, I visualise them using a two-dimensional bubble chart.

Role prioritisation

Using this chart is simple: start in the top-right corner and work your way toward the bottom-left corner.

It seems like a simple idea because it is. It's not going to blow your mind or deliver an insight you'd never expected, but it will help you double-check your priorities.
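If you prefer to double-check the chart with a few lines of code, here is a minimal Python sketch. It assumes the chart plots priority on the vertical axis and difficulty on the horizontal axis, with bubble size showing volume, so the top-right corner holds roles that are both urgent and slow to fill. The 1-5 scores, the example roles, the `Role` type and the tie-break on volume are all hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Role:
    name: str
    priority: int    # 1 (can wait) to 5 (needed now); assumed vertical axis
    difficulty: int  # 1 (quick to fill) to 5 (long lead time); assumed horizontal axis
    volume: int      # number of people needed; drawn as the bubble size


def recruitment_order(roles):
    """Order roles from the top-right corner towards the bottom-left.

    A higher priority + difficulty sum means a role sits closer to the
    top-right corner. Ties go to the larger bubble, on the assumption that
    high-volume roles need the longest-running campaigns.
    """
    return sorted(roles, key=lambda r: (r.priority + r.difficulty, r.volume), reverse=True)


# Hypothetical roles and scores, for illustration only.
roles = [
    Role("engineer", priority=5, difficulty=4, volume=16),
    Role("practice lead", priority=4, difficulty=5, volume=4),
    Role("tester", priority=3, difficulty=2, volume=4),
    Role("designer", priority=4, difficulty=3, volume=3),
]
for role in recruitment_order(roles):
    print(role.name)
```

A plain sum treats priority and difficulty as equally important; if urgency matters more to you, weight it more heavily in the sort key.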

And that's Part Two done. Phew.

As you can see, there is a lot to assess before you can even consider whether the plan to scale is viable and worth pursuing. I cannot stress the importance of doing this analysis enough. Scaling at this intensity and at this speed is extremely hard. It's not all fairy floss and merry-go-rounds.

But after trying to tear apart the proposed team model with countless questions and debates, we made it through to the other side, still clutching our piece of paper with the smiley faces drawn on it. We still thought it was possible. We knew it was going to be difficult, but that wasn't going to deter us. Everything worth doing is hard, right? If it were easy, everyone would be able to do it.

Looking back, we had only the barest idea of exactly how hard it would be. But that's a story for another time.
